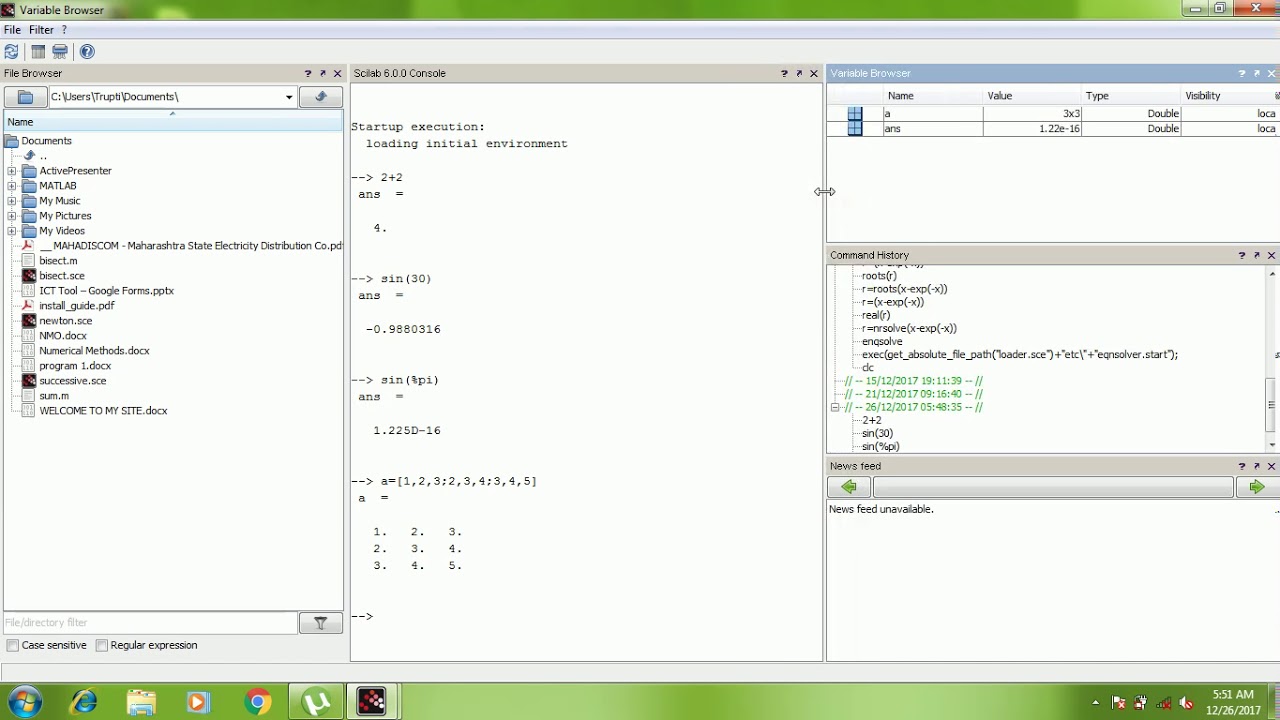

What you are doing is called power spectrum averaging, also known as RMS averaging. You definitely want to average the magnitude (you are already computing the squared magnitude when you multiply by the conjugate), because the phase depends on when your sampling began relative to the signal. If you need the phase information, you have to make sure your acquisitions are synchronized with the signal somehow. So just move the log10 outside the for loop, like so (I don't know Scilab syntax): `for filename in files:`

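Otherwise, with the log10 inside the loop, you essentially end up multiplying the spectra instead of averaging them, because a sum of logarithms is the logarithm of a product.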
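As a rough illustration of the advice above, here is a minimal Python sketch of power spectrum averaging using only the standard library. The names `dft`, `averaged_power_db`, and `records` are made up for this example, the test signal is synthetic, and a direct DFT stands in for whatever FFT routine your environment provides.

```python
import cmath
import math


def dft(x):
    """Direct O(N^2) DFT; slow, but enough for a short illustration."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def averaged_power_db(records):
    """Average |X[k]|^2 over all records, then convert to dB once."""
    n = len(records[0])
    power_sum = [0.0] * n
    for record in records:
        spectrum = dft(record)
        for k, value in enumerate(spectrum):
            # X * conj(X) is the squared magnitude; the phase drops out here.
            power_sum[k] += (value * value.conjugate()).real
    mean_power = [p / len(records) for p in power_sum]
    # The log10 is applied after the loop; a tiny floor avoids log10(0).
    return [10.0 * math.log10(max(p, 1e-30)) for p in mean_power]


# Hypothetical data: the same 4-cycle tone captured with different start phases.
N = 32
records = [
    [math.cos(2 * math.pi * 4 * t / N + phase) for t in range(N)]
    for phase in (0.0, 0.7, 1.9, 3.1)
]
spectrum_db = averaged_power_db(records)
peak_bin = max(range(N // 2), key=lambda k: spectrum_db[k])
```

Because only squared magnitudes are accumulated, the differing start phases of the records do not affect the averaged peak.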

I think you are close, but you should average the magnitudes of the spectra (`temp1_fft`) before taking the log10.

The Cooley–Tukey method (and the general idea of an FFT) was popularized by a publication of Cooley and Tukey in 1965, but it was later discovered that those two authors had independently re-invented an algorithm known to Carl Friedrich Gauss around 1805 (and subsequently rediscovered several times in limited forms). The best known use of the Cooley–Tukey algorithm is to divide the transform into two pieces of size $N/2$ at each step, which limits it to power-of-two sizes, but any factorization can be used in general (as was known to both Gauss and Cooley/Tukey). These are called the radix-2 and mixed-radix cases, respectively (and other variants such as the split-radix FFT have their own names as well). Although the basic idea is recursive, most traditional implementations rearrange the algorithm to avoid explicit recursion. Also, because the Cooley–Tukey algorithm breaks the DFT into smaller DFTs, it can be combined arbitrarily with any other algorithm for the DFT, such as those described below.

There are FFT algorithms other than Cooley–Tukey. For $N = N_1 N_2$ with coprime $N_1$ and $N_2$, one can use the prime-factor (Good–Thomas) algorithm (PFA), based on the Chinese remainder theorem, to factorize the DFT similarly to Cooley–Tukey but without the twiddle factors. The Rader–Brenner algorithm (1976) is a Cooley–Tukey-like factorization but with purely imaginary twiddle factors, reducing multiplications at the cost of increased additions and reduced numerical stability; it was later superseded by the split-radix variant of Cooley–Tukey (which achieves the same multiplication count but with fewer additions and without sacrificing accuracy).
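An FFT rapidly computes such transformations by factorizing the DFT matrix into a product of sparse (mostly zero) factors. As a result, it manages to reduce the complexity of computing the DFT from $O(N^2)$, which arises if one simply applies the definition of the DFT, to $O(N \log N)$, where $N$ is the data size. The Cooley–Tukey algorithm does this by recursively breaking a DFT of composite size $N = N_1 N_2$ into smaller DFTs of sizes $N_1$ and $N_2$, along with $O(N)$ multiplications by complex roots of unity traditionally called twiddle factors (after Gentleman and Sande, 1966).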
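The radix-2 case can be sketched in a few lines of Python. This is a minimal recursive version under the assumption that the input length is a power of two; the function name `fft_radix2` is made up for this example, and practical implementations usually avoid the explicit recursion shown here.

```python
import cmath


def fft_radix2(x):
    """Recursive radix-2 decimation-in-time FFT for power-of-two lengths."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return [complex(x[0])]
    # Split into two half-size DFTs over even- and odd-indexed samples.
    even = fft_radix2(x[0::2])
    odd = fft_radix2(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        # Twiddle factor: a complex root of unity applied to the odd half.
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


# A unit impulse has a flat spectrum: every bin has magnitude 1.
impulse_spectrum = fft_radix2([1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
```

Each level of recursion does $O(N)$ work on twiddle multiplications and additions, and there are $\log_2 N$ levels, which is where the $O(N \log N)$ cost comes from.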

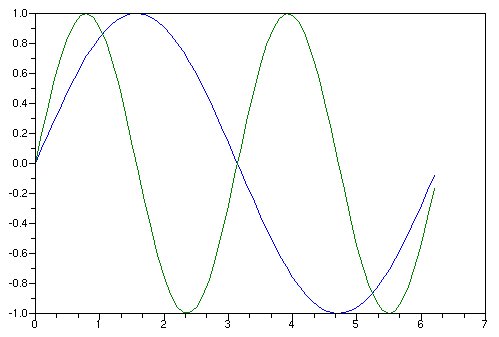

A fast Fourier transform (FFT) is an algorithm that computes the discrete Fourier transform (DFT) of a sequence, or its inverse (IDFT). Fourier analysis converts a signal from its original domain (often time or space) to a representation in the frequency domain and vice versa. The DFT is obtained by decomposing a sequence of values into components of different frequencies. This operation is useful in many fields, but computing it directly from the definition is often too slow to be practical.

Figure captions: An example FFT algorithm structure, using a decomposition into half-size FFTs. A discrete Fourier analysis of a sum of cosine waves at 10, 20, 30, 40, and 50 Hz.
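To make the definition concrete, the following Python sketch computes the DFT directly for a sum of cosine waves at 10, 20, 30, 40, and 50 Hz. The sample rate of 200 Hz, the one-second record length, and the peak threshold are assumptions chosen for this example so that each bin is 1 Hz wide; the double loop is the slow $O(N^2)$ approach that an FFT replaces.

```python
import cmath
import math


def dft(x):
    """DFT straight from the definition: N output bins, N terms each."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


SAMPLE_RATE = 200  # Hz (assumed for this example)
N = 200            # one second of samples, so bins are 1 Hz apart
TONES_HZ = (10, 20, 30, 40, 50)

signal = [
    sum(math.cos(2 * math.pi * f * t / SAMPLE_RATE) for f in TONES_HZ)
    for t in range(N)
]
spectrum = dft(signal)

# A unit-amplitude cosine contributes magnitude N/2 at its bin; N/4 is an
# arbitrary threshold that separates those peaks from rounding noise.
peaks_hz = [
    k * SAMPLE_RATE / N for k in range(N // 2) if abs(spectrum[k]) > N / 4
]
```

With these settings the peaks land on the five tone frequencies, since every tone completes a whole number of cycles within the record.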
